Image processing on the graphics card: GPU beats CPU

Image processing algorithms usually consume a lot of computing resources. In many cases the continuously growing performance of CPUs found in powerful PCs is sufficient to handle such tasks within the specified time. However, leading vendors of image processing hardware and software are constantly searching for ways to increase speed beyond what is possible on the PC’s CPU.

Typical methods of increasing speed in image processing include distributing the computing tasks across multiple multi-core processors or using specialized FPGAs. Each of these technologies has its own advantages and disadvantages, but they have one aspect in common: they generally do not use the fastest processor available in the system that is optimized for imaging algorithms, namely the processor on the graphics card, also known as the GPU (Graphics Processing Unit).

These "racers" among the processors have an incredible development history. The evolution has been driven principally by the gaming industry, where the demands placed on the graphical representation of game scenes and animations have greatly increased. Sales of millions of game consoles have contributed to the demand, resulting in large numbers of GPUs and corresponding profits that further boost the development of graphics components. Other industrial sectors are now reaping the benefits, including image processing.

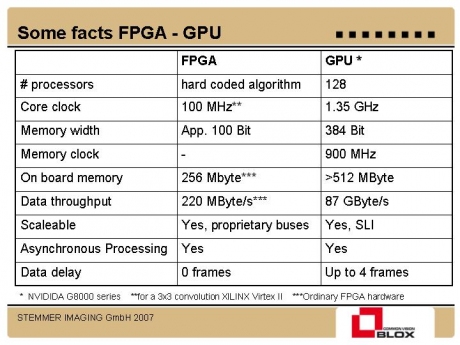

Graphics processors outperform other imaging acceleration methods in many technical aspects, even when compared with the fastest available FPGAs (see Fig. 1). For example, they are clocked at rates 10 to 20 times higher than those of typical FPGAs and, in combination with larger memory options, can achieve data throughput rates up to 500 times greater than those of standard FPGAs.

Fig. 1: Technical comparisons with even the fastest available FPGAs on the market are clearly won by graphics processors for particular criteria.

However, these increased speeds are not fully available to image processing users: outsourcing the algorithms to the GPU causes a delay in the data flow from image capture to data processing. Even with this effect, various analyses of computationally intensive operations indicate a performance increase by a factor of 2 to 10 when using a GPU in place of a CPU, while the CPU remains free for other tasks at the same time.
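The effect of the transfer delay on the achievable gain can be illustrated with a simple timing model. The following is only a sketch: the function name and all timings are hypothetical values chosen for illustration, not measurements from the tests described in this article.

```python
def effective_speedup(cpu_time_ms, gpu_compute_ms, transfer_ms):
    """Speedup of GPU offloading over the CPU, including transfer time.

    All three arguments are per-image times in milliseconds.
    transfer_ms covers the upload to and download from the graphics card.
    """
    return cpu_time_ms / (gpu_compute_ms + transfer_ms)


# Hypothetical timings, chosen only to illustrate the effect.
kernel_only = effective_speedup(40.0, 2.0, 0.0)    # ignores the transfer
with_transfer = effective_speedup(40.0, 2.0, 6.0)  # includes the transfer

print(f"kernel only:   {kernel_only:.1f}x")
print(f"with transfer: {with_transfer:.1f}x")
```

In this made-up case the raw computation is 20 times faster on the GPU, but the transfer reduces the gain seen by the application to a factor of 5.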

There are two principal reasons why GPU technology has only recently become available for image processing. First, until recently graphics cards had different processors for different tasks. This has changed with the latest graphics processors, such as the GeForce 8800 from Nvidia or equivalents from companies such as ATI: some of the 681 million transistors on the GeForce 8800 processor can be dynamically allocated to operations such as geometry or pixel computation. Second, the PCI Express link now allows fast data transfer between the host and the graphics card.

"The architecture of a graphics chip is always very complex," explains Martin Kersting, Head of Development at STEMMER IMAGING. "However, the DirectX-API and the High Level Shader Language (HLSL) compiler from Microsoft together with a handful of functions in our Common Vision Blox software library enable image processing software developers to transfer images between the host and GPU, and therefore use all processors in the system in an optimum way."

As already mentioned, data transfers between the host and the GPU cause a certain delay between capturing the image and processing the data on the graphics card. Kersting describes the advantage of the technology as follows: "Skillful use of GPU image processing can mean that special hardware is not even needed in applications with extremely high data throughputs."

To bring the benefits of GPU image processing to developers in the most effective way, STEMMER IMAGING, the image processing experts based in Puchheim, Germany, has now integrated the functionality into its Common Vision Blox (CVB) software library. "To do so, we added several new functions to CVB that can be called from a CVB application and used without any additional GPU programming experience," states Kersting.

These functions currently implement tasks such as image filtering, point operations between two images, parallel processing of four monochrome images, transformations from RGB to HSI and from Bayer to RGB formats, flat-field correction, and rotation and scaling of images. To optimize the image data transfer between main memory and the GPU, it is also possible to combine several algorithms on the graphics card by using the open programming capabilities of HLSL.
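To make one of these operations concrete, the following CPU reference sketch shows a common form of flat-field correction as a per-pixel point operation. It does not use or reproduce the CVB API. The function name, the list-of-rows image representation, and the exact formula (dark-frame subtraction followed by normalization to the mean gain) are assumptions made for illustration.

```python
def flat_field_correct(raw, flat, dark):
    """Per-pixel flat-field correction on images given as lists of rows.

    corrected = (raw - dark) * mean(flat - dark) / (flat - dark)
    """
    # Gain reference: flat-field image with the dark frame removed.
    gain = [[f - d for f, d in zip(frow, drow)]
            for frow, drow in zip(flat, dark)]
    pixel_count = sum(len(row) for row in gain)
    mean_gain = sum(sum(row) for row in gain) / pixel_count

    corrected = []
    for rrow, drow, grow in zip(raw, dark, gain):
        out_row = []
        for r, d, g in zip(rrow, drow, grow):
            # Pixels with no usable gain are set to 0 to avoid dividing by zero.
            out_row.append((r - d) * mean_gain / g if g > 0 else 0.0)
        corrected.append(out_row)
    return corrected


# A uniform scene seen through uneven illumination (illustrative values).
flat = [[100, 50], [50, 100]]
dark = [[0, 0], [0, 0]]
raw = [[50, 25], [25, 50]]
print(flat_field_correct(raw, flat, dark))
```

Because every output pixel depends only on the pixels at the same position in the three input images, the operation maps naturally onto the GPU, where many pixels are processed in parallel.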

Kersting and his team have carried out multiple tests on possible increases in speed with the new technology. For example, images captured with a monochrome CCIR camera such as the JAI A11 on a PC-based system with an Nvidia 8800 graphics card were upscaled to 2K × 2K pixels and displayed on a PC monitor. At the same time, the graphics processor computed a 3×3 Sobel filter at a rate of 30 images per second. In a direct comparison, a 2.4 GHz Intel Core 2 Duo processor and the Nvidia 8800 each computed a 5×5 filter.
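For readers unfamiliar with the filter used in this test, the following is a minimal CPU reference sketch of a 3×3 Sobel filter. It is not the GPU implementation used by STEMMER IMAGING. The function name, the list-of-rows image representation, the zero border, and the |gx| + |gy| approximation of the gradient magnitude are assumptions made for illustration.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Approximate gradient magnitude |gx| + |gy| of a grayscale image.

    img is a list of rows of pixel values. Border pixels are left at 0
    because the 3x3 neighbourhood is incomplete there.
    """
    height, width = len(img), len(img[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = img[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            out[y][x] = abs(gx) + abs(gy)
    return out


# A vertical edge: dark on the left, bright on the right.
edge = [[0, 0, 255, 255] for _ in range(4)]
for row in sobel_magnitude(edge):
    print(row)
```

Each output pixel is computed independently from a small neighbourhood of the input image, which is why filters of this kind can be spread across the many parallel processing units of a GPU.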

The results impressively demonstrate what the combination of Common Vision Blox and the new GPU technology makes possible: the Nvidia 8800 was about five times faster than the CPU (see Fig. 2).